Introduction

Content moderation platforms are comprehensive software solutions that allow organizations to monitor, filter, and manage user-generated content across text, images, video, and audio. These platforms act as a shield, protecting online communities from harassment, hate speech, scams, and adult content. By integrating advanced machine learning (ML) and Large Language Models (LLMs), modern moderation tools can “read” the intent behind a post, rather than just scanning for banned words.

These tools have become more important as digital safety laws tighten worldwide, including the EU’s Digital Services Act (DSA) and similar frameworks elsewhere. Without a robust moderation platform, companies risk heavy fines, platform bans, and a “toxic” reputation that can alienate users and advertisers alike. Key evaluation criteria include multimodal support (the ability to analyze text, images, video, and audio, ideally within one system), latency (how quickly content is scored), and “human-in-the-loop” (HITL) capabilities that route the most complex, nuanced cases to human moderators.

Best for: Social media networks, online gaming communities, e-commerce marketplaces, dating apps, and any digital platform where users interact publicly. These platforms are essential for Trust & Safety teams, Community Managers, and Compliance Officers in mid-sized to large enterprises.

Not ideal for: Small private messaging apps whose users are already vetted, or static websites with no user interaction. They may also be overkill for blogs that receive only a handful of comments per month, which can be moderated manually.

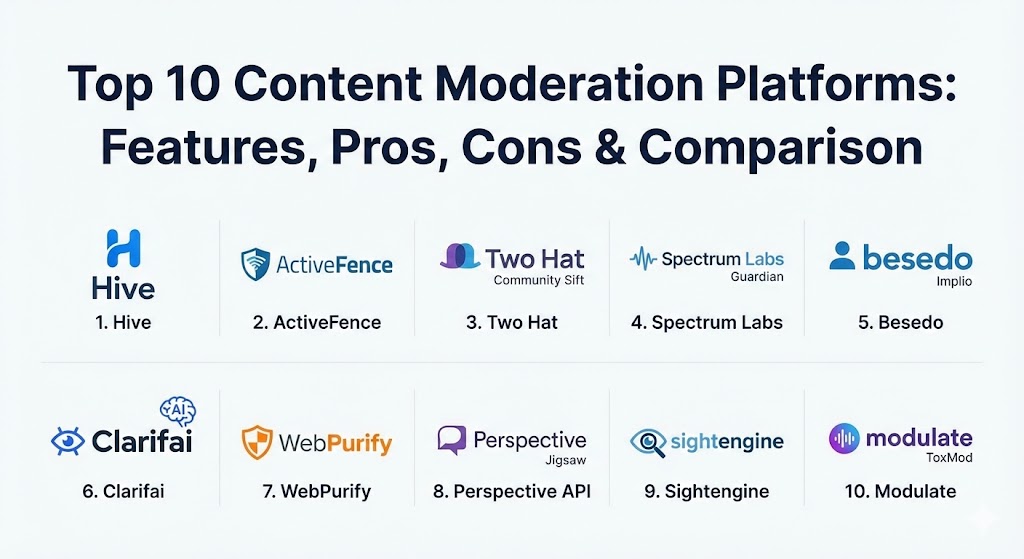

Top 10 Content Moderation Platforms

1 — Hive

Hive is often cited as a leader in automated content moderation. It offers a large suite of pre-trained AI models that can identify everything from NSFW imagery to AI-generated text and “deepfake” images and video.

- Key features:

- Multimodal detection across text, image, video, and audio.

- Specialized models for detecting AI-generated content (Deepfake detection).

- High-speed API with sub-second latency for real-time applications.

- Demographic and celebrity recognition for brand safety.

- Advanced “Sponsorship” detection to ensure ad compliance.

- Developer-friendly documentation with easy SDK integration.

- Pros:

- High accuracy, which Hive attributes to training on very large proprietary datasets.

- Highly scalable, making it a common choice among some of the world’s largest apps.

- Cons:

- Pricing can scale rapidly with high volume, making it expensive for startups.

- The “black box” nature of some models makes fine-tuning difficult for niche policies.

- Security & compliance: SOC 2 Type II, GDPR, HIPAA, and ISO 27001 compliant. Supports SSO and granular API key management.

- Support & community: Dedicated account managers for enterprise clients; extensive API documentation and a developer portal.

2 — ActiveFence

ActiveFence is an enterprise-grade trust and safety platform that goes beyond simple filtering. It provides proactive threat intelligence by monitoring “malicious actors” across the dark web to predict and prevent harm before it hits your platform.

- Key features:

- Proactive threat intelligence and detection of coordinated attacks.

- ActiveOS: A unified dashboard for human moderators to manage queues.

- Cross-platform risk assessment (detecting a bad actor across multiple sites).

- Support for 100+ languages with deep cultural nuance.

- Integrated “Red Teaming” for generative AI safety.

- Detailed audit logs and compliance reporting for global regulations.

- Pros:

- Best-in-class for detecting complex harms like radicalization and grooming.

- The unified workflow tool (ActiveOS) significantly boosts moderator efficiency.

- Cons:

- High barrier to entry in terms of cost and integration complexity.

- May provide “too much data” for small teams that only need basic filtering.

- Security & compliance: Ready for GDPR, CCPA, and DSA compliance; SOC 2 compliant, with strong data encryption.

- Support & community: Comprehensive onboarding and ongoing strategic consulting from trust and safety experts.

3 — Two Hat (by Microsoft)

Two Hat, now part of Microsoft and best known for its Community Sift product, is a veteran of the social and gaming space. It is designed specifically for the high-velocity, slang-heavy environment of live chat.

- Key features:

- Real-time chat filtering with context-aware “closeness” scores.

- Advanced username and profile moderation to prevent bypasses.

- User reputation systems that track behavior over time.

- Multilingual support including regional dialects and “leetspeak.”

- Automated “nudging” to encourage positive user behavior in real-time.

- Robust reporting for community health and toxicity trends.

- Pros:

- Exceptional at managing the unique challenges of gaming communities.

- Highly customizable policies that can evolve with community slang.

- Cons:

- Integration with non-Microsoft environments can sometimes feel less seamless.

- The administrative interface is powerful but has a steep learning curve.

- Security & compliance: ISO 27001, HIPAA, and GDPR compliant. Integrated with Microsoft’s enterprise security stack.

- Support & community: Access to Microsoft’s global support network and a deep bench of gaming industry experts.
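To see why leetspeak and punctuation tricks defeat a plain word list, here is a minimal sketch of the kind of normalization a chat filter might run before matching. The substitution map, the `BLOCKLIST`, and the function names are hypothetical illustrations, not Two Hat’s or Community Sift’s actual logic.

```python
import re

# Hypothetical substitution map for characters often swapped in to dodge filters.
LEET_MAP = str.maketrans({
    "0": "o", "1": "i", "3": "e", "4": "a",
    "5": "s", "7": "t", "@": "a", "$": "s",
})

# Hypothetical blocklist; real products maintain far larger, curated lists.
BLOCKLIST = {"scam", "idiot"}


def normalize(text: str) -> str:
    """Lowercase, undo leetspeak substitutions, strip separators, collapse repeats."""
    text = text.lower().translate(LEET_MAP)
    # Drop punctuation people insert between letters (e.g. "i.d.i.o.t").
    text = re.sub(r"[^a-z\s]", "", text)
    # Collapse runs of 3+ identical characters ("idiooot" -> "idiot").
    return re.sub(r"(.)\1{2,}", r"\1", text)


def flagged_terms(text: str) -> set[str]:
    """Return blocklisted words found in the normalized message."""
    return {word for word in normalize(text).split() if word in BLOCKLIST}


print(flagged_terms("what an 1d10t"))  # {'idiot'}
print(flagged_terms("total sc@aaam"))  # {'scam'}
```

Normalization catches “sc@m,” but it cannot tell friendly banter between teammates from targeted harassment, which is the gap that context-aware scoring and reputation tracking aim to fill.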

4 — Spectrum Labs (Guardian)

Spectrum Labs focuses on “contextual AI,” moving beyond simple keyword matching to understand the intent behind a conversation. Its Guardian platform is built to identify harassment, hate speech, and fraud.

- Key features:

- Behavior-based detection rather than word-based detection.

- Real-time toxicity scoring for every user interaction.

- Automated actions (hide, delete, warn) based on custom thresholds.

- Advanced fraud and scam detection for marketplaces.

- Multilingual coverage with a focus on intent and sentiment.

- Integrated human-review workflow with AI-assisted tagging.

- Pros:

- Significantly lower false-positive rates compared to traditional filters.

- Excellent for identifying subtle forms of harassment that keywords miss.

- Cons:

- Pricing is generally opaque and aimed at the enterprise market.

- Requires significant initial “tuning” to match your community’s specific policies.

- Security & compliance: SOC 2, GDPR, and CCPA compliant. Supports encrypted data ingestion.

- Support & community: High-touch customer success teams and detailed technical integration guides.

5 — Besedo (Implio)

Besedo is unusual in offering both a moderation platform (Implio) and a large global workforce of human moderators. It specializes in a “Human-AI Hybrid” approach.

- Key features:

- Implio platform for unified automated and manual moderation.

- Tailored models for e-commerce, dating, and marketplaces.

- Automated fraud and scam detection specific to classified ads.

- Integrated human review services with 24/7 global coverage.

- Real-time performance analytics for both AI and human teams.

- Customizable rule-builder for quick policy adjustments.

- Pros:

- A “one-stop shop” for companies that need both software and people.

- Industry-leading expertise in marketplace quality and fraud prevention.

- Cons:

- The software interface (Implio) can feel less “AI-first” than competitors like Hive.

- Human moderation costs can fluctuate based on volume surges.

- Security & compliance: GDPR compliant; ISO 27001 certified. Robust data privacy protocols for human reviewers.

- Support & community: Strong focus on partnership; offers consultancy on moderation policy design.
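A common marketplace scam pattern is steering buyers off-platform by embedding contact details in a listing. Rule-builders typically express checks like this declaratively; the following is a minimal Python sketch of one such rule, assuming a hypothetical `Listing` record and regex patterns chosen for illustration. It is not Implio’s rule syntax.

```python
import re
from dataclasses import dataclass


@dataclass
class Listing:
    """Hypothetical classified-ad record."""
    title: str
    description: str


# Illustrative patterns: an email address, or 9+ digits (allowing single
# spaces, dots, or dashes between them) that look like a phone number.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+")
PHONE_RE = re.compile(r"(?:\d[\s.-]?){9,}")


def off_platform_contact(listing: Listing) -> list[str]:
    """Return the reasons (if any) this listing should be sent to review."""
    text = f"{listing.title} {listing.description}"
    reasons = []
    if EMAIL_RE.search(text):
        reasons.append("email address in listing")
    if PHONE_RE.search(text):
        reasons.append("phone number in listing")
    return reasons


print(off_platform_contact(Listing("Sofa", "Call 555-123-4567 to pay by wire")))
# ['phone number in listing']
```

Routing matches to a reviewer rather than deleting them outright is the safer default, since some legitimate sellers include contact details too.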

6 — Clarifai

Clarifai is an AI platform that gives organizations the tools to build and train their own custom moderation models. It is ideal for companies with highly specific or niche moderation needs.

- Key features:

- Custom model training for unique visual or text-based content.

- Support for computer vision, NLP, and audio recognition.

- “Portal” interface for managing data labeling and model versions.

- Scalable API that can be deployed on-premises or in the cloud.

- Pre-built models for NSFW, weapons, drugs, and gore.

- Advanced search and discovery tools for large content libraries.

- Pros:

- Unrivaled flexibility; you aren’t stuck with “off-the-shelf” logic.

- Strong performance in visual AI, including video and live streams.

- Cons:

- Requires internal AI/ML expertise to truly get the most out of the platform.

- Implementation takes longer than “plug-and-play” solutions.

- Security & compliance: SOC 2, HIPAA, and GDPR compliant. Offers air-gapped deployment for high-security needs.

- Support & community: Active developer community and comprehensive documentation for custom builds.

7 — WebPurify

WebPurify is the “friendly” face of content moderation, offering straightforward APIs and a transparent pricing model that appeals to both startups and established brands.

- Key features:

- Simple REST API for text, image, and video moderation.

- “Live” human moderation services with SLA-backed turnaround times (often under 5 minutes).

- Customizable profanity filters with “intelligent” replacement options.

- Specialized “Children’s Safety” moderation services.

- AI-based detection for nudity, hate symbols, and weapons.

- Easy integration with major CMS platforms like WordPress.

- Pros:

- Highly accessible pricing; one of the few with a clear pay-as-you-go model.

- Very fast to implement; a developer can have it running in an hour.

- Cons:

- Lacks the deep “threat intelligence” and proactive features of ActiveFence.

- The dashboard is functional but basic compared to enterprise competitors.

- Security & compliance: GDPR and HIPAA compliant. Data is processed in secure facilities.

- Support & community: Responsive email support and a straightforward knowledge base.

8 — Perspective API (by Google/Jigsaw)

Perspective is a free (or low-cost) API developed by Google’s Jigsaw team. It focuses purely on “Toxicity” scoring for text, helping publishers foster better conversations.

- Key features:

- Toxicity, insult, threat, and profanity scoring.

- Trained on millions of comments from diverse online sources.

- “Nudge” integration: Show users their toxicity score before they post.

- Simple API that returns a probability score from 0 to 1.

- Multilingual support for major global languages.

- Completely free for most non-commercial and research use cases.

- Pros:

- Zero cost for many users, making it a great “first step” in moderation.

- Backed by Google’s massive research and machine learning infrastructure.

- Cons:

- Limited to text only; no support for images or video.

- Does not offer a management UI or human review queue.

- Security & compliance: Google Cloud’s standard security and privacy frameworks; GDPR compliant.

- Support & community: Open-source community on GitHub and extensive public documentation.
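The sketch below shows the shape of a Perspective API call: build a JSON request naming the attributes to score, POST it to the `comments:analyze` endpoint, and read each attribute’s `summaryScore.value`. It uses only the Python standard library and follows Perspective’s public `v1alpha1` REST interface; `api_key` is a placeholder you supply, the sample score is made up, and the code is a sketch rather than a production client (no retries or quota handling).

```python
import json
import urllib.request

ENDPOINT = "https://commentanalyzer.googleapis.com/v1alpha1/comments:analyze"


def build_request(text: str, attributes=("TOXICITY",), languages=("en",)) -> dict:
    """Build an AnalyzeComment request body for the given attributes."""
    return {
        "comment": {"text": text},
        "languages": list(languages),
        "requestedAttributes": {name: {} for name in attributes},
    }


def parse_scores(response: dict) -> dict:
    """Map each returned attribute to its summary probability (0 to 1)."""
    return {
        name: data["summaryScore"]["value"]
        for name, data in response.get("attributeScores", {}).items()
    }


def analyze(text: str, api_key: str) -> dict:
    """Send one comment to Perspective and return its attribute scores."""
    body = json.dumps(build_request(text)).encode("utf-8")
    req = urllib.request.Request(
        f"{ENDPOINT}?key={api_key}",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=10) as resp:
        return parse_scores(json.load(resp))


# Illustrative response shape with a made-up score; no network call here.
sample = {"attributeScores": {"TOXICITY": {"summaryScore": {"value": 0.92, "type": "PROBABILITY"}}}}
print(parse_scores(sample))  # {'TOXICITY': 0.92}
```

Because the API returns only scores, the decision about what to do at a given score (hide, queue, or nudge) stays in your own code, which matches the “no management UI” limitation noted above.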

9 — Sightengine

Sightengine is a developer-centric platform that provides incredibly fast, specialized APIs for visual content moderation. It is often the “engine” behind the scenes of popular social apps.

- Key features:

- Real-time image and video analysis (nudity, gore, fraud, text).

- Specialized detection for “Face Quality” and “Identity Verification.”

- Deepfake and synthetic image detection.

- “Aesthetic” scoring to help platforms prioritize high-quality content.

- OCR (Optical Character Recognition) to find text hidden in images.

- Detailed JSON responses with precise coordinates for detected objects.

- Pros:

- Lightning-fast response times; ideal for live video applications.

- Clear, developer-friendly documentation and predictable pricing.

- Cons:

- It is an API service first; there is no “inbox” for human moderators.

- Limited text moderation capabilities compared to Hive or Spectrum.

- Security & compliance: GDPR compliant. Data is not stored by default after processing.

- Support & community: Excellent technical support directly from the engineers who build the product.

10 — Modulate (ToxMod)

As voice chat becomes standard in gaming and VR, Modulate has filled a critical gap with ToxMod, which the company positions as a leading proactive voice moderation solution.

- Key features:

- Real-time audio moderation that “listens” for toxicity and harassment.

- Emotion detection: Can distinguish between excited yelling and aggressive shouting.

- Privacy-first architecture that processes data without recording everything.

- Behavioral insights: Tracks problematic users across voice sessions.

- Seamless integration with major game engines (Unity, Unreal).

- Automatic flagging of high-risk incidents for human review.

- Pros:

- One of the few viable solutions for large-scale, real-time voice moderation.

- Drastically reduces toxicity in gaming environments where text chat is absent.

- Cons:

- Very niche; not useful for platforms that don’t have voice features.

- High technical complexity to integrate into a live audio pipeline.

- Security & compliance: SOC 2, COPPA (Children’s Online Privacy Protection Act), and GDPR compliant.

- Support & community: Dedicated integration engineers for gaming and metaverse developers.
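Tracking problematic users across sessions generally means keeping a per-user risk score that rises with each confirmed incident and fades over time, so recent behavior counts more than old offenses. A minimal sketch, assuming exponential decay with a hypothetical 7-day half-life; this is a generic technique, not how ToxMod computes its behavioral insights.

```python
from dataclasses import dataclass

HALF_LIFE_DAYS = 7.0  # hypothetical: an incident's weight halves every week


@dataclass
class UserRisk:
    score: float = 0.0
    last_update_day: float = 0.0

    def _decay_to(self, day: float) -> None:
        """Apply exponential decay for the time elapsed since the last update."""
        elapsed = max(0.0, day - self.last_update_day)
        self.score *= 0.5 ** (elapsed / HALF_LIFE_DAYS)
        self.last_update_day = day

    def record_incident(self, day: float, severity: float) -> None:
        """Decay the existing score to `day`, then add the new incident."""
        self._decay_to(day)
        self.score += severity

    def current(self, day: float) -> float:
        """Return the decayed score as of `day` without modifying state."""
        elapsed = max(0.0, day - self.last_update_day)
        return self.score * 0.5 ** (elapsed / HALF_LIFE_DAYS)


risk = UserRisk()
risk.record_incident(day=0, severity=4.0)
risk.record_incident(day=7, severity=1.0)
print(risk.current(day=7))   # 3.0
print(risk.current(day=14))  # 1.5
```

A platform could then send users above some threshold to closer review; both the half-life and the threshold are policy choices to tune.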

Comparison Table

| Tool Name | Best For | Platform(s) Supported | Standout Feature | Rating (Gartner Peer Insights) |
| --- | --- | --- | --- | --- |
| Hive | Scale & Multimodal | API, Web, Mobile | AI/Deepfake Detection | 4.7 / 5 |
| ActiveFence | Proactive Threats | Web Dashboard, API | Threat Intelligence Feeds | 4.8 / 5 |
| Two Hat | Gaming Chat | Xbox, PC, Mobile | Real-time Behavioral Nudge | 4.5 / 5 |
| Spectrum Labs | Contextual NLP | API, Web | Intent-Based Toxicity | 4.4 / 5 |
| Besedo | Marketplaces | Web, Mobile, API | Human-AI Hybrid Service | 4.3 / 5 |
| Clarifai | Custom AI Models | Cloud, On-Prem, API | Model Training Portal | 4.6 / 5 |
| WebPurify | SMBs / Startups | API, WordPress | Fast Human Review SLAs | 4.4 / 5 |
| Perspective API | Toxicity Scoring | API | Free Toxicity Tiers | 4.2 / 5 |
| Sightengine | Visual API | API, Mobile | High-Speed Visual Detection | 4.5 / 5 |
| Modulate | Voice & VR | Unity, Unreal, API | Real-time Voice Moderation | 4.7 / 5 |

Evaluation & Scoring of Content Moderation Platforms

When selecting a content moderation platform, organizations must weigh technical performance against operational ease. A tool that detects everything but has a 10-second delay is useless for live chat, while a fast tool with 50% accuracy will frustrate users.

| Category | Weight | Evaluation Criteria |
| --- | --- | --- |
| Core Features | 25% | Multimodal support (Text/Image/Video/Voice), deepfake detection, and language coverage. |
| Ease of Use | 15% | Clarity of the admin dashboard, ease of rule-building, and quality of documentation. |
| Integrations | 15% | Quality of APIs, SDKs for mobile, and compatibility with CMS/Gaming engines. |
| Security | 10% | Compliance certifications (SOC 2, GDPR), encryption, and data residency options. |
| Performance | 10% | API latency (must be sub-second for chat) and system uptime/reliability. |
| Support | 10% | Availability of human-in-the-loop services and responsive technical support. |
| Price / Value | 15% | Transparency of pricing and cost-effectiveness at massive scale. |
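The weights in this rubric sum to 100%, so an overall score is simply a weighted average of per-category ratings. A minimal sketch, assuming each category is rated on a 0 to 10 scale; the sample ratings are hypothetical and do not describe any tool in this list.

```python
WEIGHTS = {
    "Core Features": 0.25,
    "Ease of Use": 0.15,
    "Integrations": 0.15,
    "Security": 0.10,
    "Performance": 0.10,
    "Support": 0.10,
    "Price / Value": 0.15,
}


def weighted_score(ratings: dict[str, float]) -> float:
    """Combine 0-10 category ratings into a single 0-10 score using WEIGHTS."""
    missing = WEIGHTS.keys() - ratings.keys()
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    return sum(WEIGHTS[cat] * ratings[cat] for cat in WEIGHTS)


# Hypothetical ratings for an imaginary vendor.
example = {
    "Core Features": 9, "Ease of Use": 7, "Integrations": 8,
    "Security": 9, "Performance": 8, "Support": 6, "Price / Value": 5,
}
print(round(weighted_score(example), 2))  # 7.55
```

Adjusting the weights to your own priorities (for example, raising Performance for live chat) changes the ranking without changing the method.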

Which Content Moderation Platform Is Right for You?

The “right” platform depends on where your content is created and who is creating it.

- Solo Users & Small Creators: If you are a solo developer or run a small blog, Perspective API or WebPurify’s basic tier is the best starting point. Both offer low-to-zero cost and handle the “low-hanging fruit” of profanity and spam.

- Startups & SMBs: If you need to protect a growing community without a dedicated AI team, WebPurify or Sightengine provide the best “plug-and-play” experience with predictable costs.

- Mid-Market & Specialized Platforms: For dating apps or marketplaces, Besedo is often the winner because it understands the specific “scam” patterns of those industries. For gaming apps, Two Hat or Modulate are essential for handling live chat and voice.

- Enterprise & Global Brands: Large social networks or news organizations require the power of Hive or ActiveFence. These tools offer the scale and proactive intelligence needed to handle billions of posts while staying compliant with the EU’s DSA and other global regulations.

- Custom AI Needs: If your brand has a very specific “gray area” (e.g., a medical site that needs to allow certain graphic images but ban others), Clarifai allows you to build a custom model that follows your exact logic.

Frequently Asked Questions (FAQs)

1. Is AI content moderation 100% accurate?

No. While AI has reached incredible levels of accuracy (often 95%+), it still struggles with sarcasm, cultural nuance, and “coded” language. This is why most enterprises use a “hybrid” model with human reviewers.
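Headline accuracy figures can also hide how many flags are wrong when violations are rare. A worked example under hypothetical assumptions: 1,000,000 posts, 1% of which actually violate policy, screened by a classifier that catches 95% of violations (sensitivity) and correctly clears 95% of benign posts (specificity).

```python
def flag_outcomes(posts: int, prevalence: float,
                  sensitivity: float, specificity: float) -> dict:
    """Expected counts when a binary classifier screens `posts` items."""
    violating = posts * prevalence
    benign = posts - violating
    true_pos = violating * sensitivity      # violations correctly flagged
    false_neg = violating - true_pos        # violations missed
    false_pos = benign * (1 - specificity)  # benign posts wrongly flagged
    precision = true_pos / (true_pos + false_pos)
    return {"true_pos": true_pos, "false_pos": false_pos,
            "false_neg": false_neg, "precision": precision}


result = flag_outcomes(1_000_000, prevalence=0.01, sensitivity=0.95, specificity=0.95)
print({k: round(v, 3) for k, v in result.items()})
# {'true_pos': 9500.0, 'false_pos': 49500.0, 'false_neg': 500.0, 'precision': 0.161}
```

In this scenario roughly 84% of flagged posts are false alarms, and 500 real violations slip through, which is why borderline flags are usually routed to human reviewers rather than actioned automatically.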

2. Can these tools moderate live video streams?

Yes. Modern platforms like Sightengine and Hive can analyze video frames in real-time. However, this is significantly more resource-intensive and expensive than moderating static images.
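Counting model calls makes the cost gap concrete. A minimal sketch, assuming a hypothetical pipeline that samples a fixed number of frames per second instead of scoring every frame; the sampling rate is a tunable assumption, not a vendor default.

```python
import math


def frames_to_score(duration_s: float, source_fps: float, sample_fps: float) -> int:
    """Number of frames a fixed-rate sampler submits for moderation over a clip."""
    if sample_fps > source_fps:
        raise ValueError("cannot sample faster than the source frame rate")
    return math.ceil(duration_s * sample_fps)


ten_minutes = 10 * 60
print(frames_to_score(ten_minutes, source_fps=30, sample_fps=30))  # 18000 (every frame)
print(frames_to_score(ten_minutes, source_fps=30, sample_fps=1))   # 600 (one per second)
```

Even at one frame per second, a ten-minute clip costs as many model calls as 600 still images, and a live stream keeps generating them for as long as it runs. Lower sampling rates save money but can miss brief violations that fall between sampled frames.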

3. Do content moderation tools support multiple languages?

Most leading platforms support 50 or more languages. Tools like ActiveFence and Spectrum Labs are particularly strong at understanding the cultural context and slang of non-English languages.

4. What is “Human-in-the-Loop” (HITL) moderation?

HITL is a workflow where the AI flags content it is unsure about (e.g., 60% probability of a violation) and sends it to a human moderator for a final decision, ensuring higher accuracy.
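HITL is commonly implemented as two thresholds on the model’s violation probability: confident scores are actioned automatically, and the uncertain band in between goes to a human queue. A minimal sketch; the 0.4 and 0.9 cut-offs are hypothetical and would be tuned per policy and content type.

```python
from enum import Enum


class Route(Enum):
    APPROVE = "approve"
    HUMAN_REVIEW = "human_review"
    REMOVE = "remove"


def route(violation_prob: float, low: float = 0.4, high: float = 0.9) -> Route:
    """Route one item based on a model's violation probability (0 to 1)."""
    if not 0.0 <= violation_prob <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    if violation_prob >= high:
        return Route.REMOVE        # confident violation: act automatically
    if violation_prob >= low:
        return Route.HUMAN_REVIEW  # uncertain band, e.g. a 60% score
    return Route.APPROVE           # confident non-violation


for p in (0.1, 0.6, 0.95):
    print(p, route(p).value)
# 0.1 approve
# 0.6 human_review
# 0.95 remove
```

Widening the band sends more items to humans (higher cost, fewer automated mistakes); narrowing it does the opposite.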

5. How do these tools handle “Deepfakes”?

In 2026, many tools (like Hive) have added dedicated models that look for the digital artifacts and inconsistencies that mark a photo or video as AI-generated rather than real.

6. Is it better to build or buy a moderation system?

For most companies, buying (using an API) is better than building. Building a high-accuracy AI model in-house requires millions of labeled data points and a dedicated team of data scientists.

7. How much does a content moderation platform cost?

Pricing varies from free (Perspective API) to several cents per item (WebPurify) to custom enterprise contracts (ActiveFence) that can range from $10,000 to $100,000+ per year.

8. Can moderation tools prevent “Shadow Banning”?

Tools provide the data to ban or hide users, but “shadow banning” is a platform policy. Most modern tools emphasize transparency, providing clear reasons why a post was flagged to help with user appeals.

9. Are these tools compliant with the EU Digital Services Act (DSA)?

Top-tier platforms like ActiveFence and Hive are designed with the DSA in mind, providing the detailed audit logs and transparency reporting required by European regulators.

10. What is “Real-time Nudging”?

It is a feature where the platform alerts a user as they are typing that their comment might be toxic, giving them a chance to rephrase it before it is even posted.
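The flow can be sketched simply: score the draft before submission and, if it crosses a threshold, show a prompt instead of posting. A minimal sketch, assuming a hypothetical `score_fn` that returns a toxicity probability (for example, a wrapper around a scoring API), a hypothetical 0.8 threshold, and one possible design choice: letting the user confirm and post anyway.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class SubmitResult:
    posted: bool
    nudge: Optional[str] = None


def submit(draft: str, score_fn: Callable[[str], float],
           confirmed: bool = False, threshold: float = 0.8) -> SubmitResult:
    """Post the draft, or return a nudge if it scores high and the user hasn't confirmed."""
    if not confirmed and score_fn(draft) >= threshold:
        return SubmitResult(
            posted=False,
            nudge="This comment may come across as hurtful. Edit it, or post anyway?",
        )
    return SubmitResult(posted=True)


def toy_score(text: str) -> float:
    """Stand-in scorer for this example only: flags drafts containing "idiot"."""
    return 0.95 if "idiot" in text.lower() else 0.05


print(submit("Nice build!", toy_score).posted)                 # True
print(submit("You idiot", toy_score).posted)                   # False
print(submit("You idiot", toy_score, confirmed=True).posted)   # True
```

Whether to allow “post anyway,” and whether such posts then go to review, is a policy decision; the nudge itself only gives the user a moment to reconsider.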

Conclusion

Choosing a content moderation platform in 2026 is no longer just about filtering bad words; it’s about choosing a partner to safeguard your community’s future. The “best” tool isn’t necessarily the one with the most features, but the one that aligns with your specific content types and community guidelines. Whether you prioritize the lightning speed of Sightengine, the deep intelligence of ActiveFence, or the human touch of Besedo, the goal remains the same: creating a digital space where users feel safe, heard, and respected.